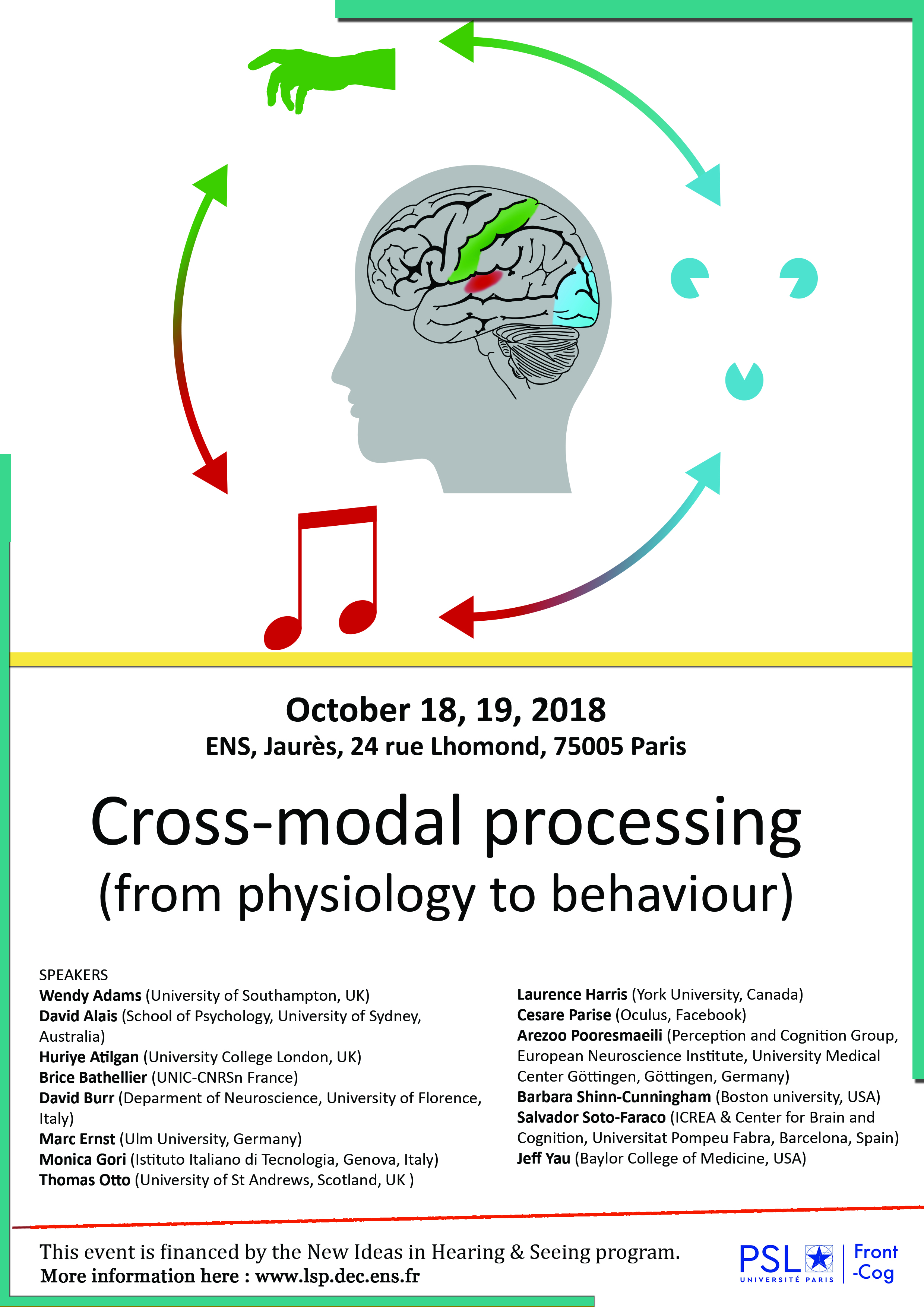

Cross-modal processing (from physiology to behaviour)

Salle Jaurès, 24 rue Lhomond, 75005 Paris


October 18-19, 2018, at ENS, Salle Jaurès, 24 rue Lhomond, 75005 Paris.

This event is financed by the New Ideas in Hearing & Seeing programme.

Programme:

October 18

13h30 Welcome and presentation

Development of multisensory processing (Chair: Claudia Lunghi)

14h00 David Burr : "Cross-sensory integration and calibration during development"

14h40 Monica Gori : "Spatial and temporal cross-sensory calibration in typical and impaired children and adults"

15h20 Marc Ernst : "Multisensory perception for action in newly sighted individuals"

16h00 Coffee Break

Vision and Touch (Chair: Jackson Graves)

16h30 Laurence Harris : "Lying, moving and sounds affect tactile processing"

17h10 Wendy Adams : "Understanding Material Properties from Vision and Touch"

17h50 General Discussion

20h00 Dinner

October 19

9h30 Coffee

Timing matters: physiology (Chair: Yves Boubenec)

9h40 Huriye Atilgan : "A visionary approach to listening: the role of vision in auditory scene analysis"

10h20 Brice Bathellier : "Context-dependent signaling of coincident auditory and visual events in primary visual cortex"

11h00 Coffee Break

Timing matters: psychophysics (Chair: Ljubica Jovanovic)

11h20 Cesare Parise : "Detecting correlations across the senses"

12h00 Thomas Otto : "Trimodal is faster than bimodal and race models can explain it all"

12h40 Lunch

Attention, reward and awareness (Chair: Giovanni Di Liberto)

14h00 Barbara Shinn-Cunningham : "Engagement of spatial processing networks"

14h40 Arezoo Pooresmaeili : "Integration of reward value across sensory modalities during perceptual and oculomotor tasks"

15h20 Salvador Soto-Faraco : "Multisensory Integration at the crossroads of Attention, Conflict and Awareness"

16h00 Coffee Break

Supramodal processing (Chair: Tarryn Balsdon)

16h30 Jeff Yau : "Distributed and interactive cortical systems for audition and touch"

17h10 David Alais : "A common mechanism processes auditory and visual motion"

17h50 General Discussion

18h30 Cocktail

Abstracts:

Arezoo Pooresmaeili (Perception and Cognition Group, European Neuroscience Institute, University Medical Center Göttingen, Göttingen, Germany)

"Integration of reward value across sensory modalities during perceptual and oculomotor tasks"

Motivationally salient signals such as reward value have a profound effect on behavior. Stimuli associated with higher rewards enhance sensory processing and energize actions that lead to reward attainment. Recently, we showed that reward modulates sensory perception even when reward cues are irrelevant to the task at hand and are delivered through a different sensory modality (Pooresmaeili et al. 2014). These results suggest that the reward value of cues in one sensory modality can be integrated with that of cues from a different modality. To shed light on the mechanisms underlying this integration, we conducted three follow-up experiments. In an oculomotor task, we found that the reward value of visual, auditory or bimodal distractors affects the trajectory of saccadic eye movements to a target. Importantly, the reward value of bimodal distractors was integrated across vision and audition, but only if the auditory and visual components had overlapping spatial locations. In a second experiment, using EEG, we found that reward enhanced early electrophysiological correlates of auditory processing (measured as the amplitude of mismatch negativity ERPs), suggesting that reward effects could be partly mediated by enhancing the bottom-up salience of stimuli. However, this early modulation disappeared, and was replaced by a later component, when auditory cues served as distractors in a visual oddball detection task. These results show that bottom-up effects of reward can be completely overridden by top-down task demands, especially if auditory and visual signals are not integrated as emanating from the same object. Finally, in a visual orientation discrimination task, we directly compared auditory and visual reward signals when they could or could not be integrated with the target. Reward signals facilitated sensory perception when they were perceived to belong to the target but led to suppression when they were localized to different objects.
Taken together, these results suggest that reward value can be integrated across sensory modalities, but its net effect depends on whether optimal conditions for multisensory integration are provided.

Barbara Shinn-Cunningham (Boston University)

"Engagement of spatial processing networks"

Neuroimaging with fMRI shows that processing of both auditory and visual spatial information engages the same brain network, one that has previously been associated with spatial visual attention. This network includes spatial control regions in frontal cortex, as well as retinotopically organized spatial maps in parietal cortex. This talk reviews both the fMRI evidence that visual and auditory inputs share spatial processing, and EEG evidence supporting the view that degraded auditory spatial information (arising, e.g., from hearing impairment or from the presentation of unnatural spatial cues) leads to poor engagement of this spatial attention network. These results have important implications for the design of hearing aids and other assistive listening devices, which will be discussed.

Brice Bathellier (UNIC-CNRS)

"Context-dependent signaling of coincident auditory and visual events in primary visual cortex"

Detecting rapid, coincident changes across sensory modalities is essential for recognizing sudden threats or events. Using two-photon calcium imaging of identified cell types in awake, head-fixed mice, we show that a much larger fraction of auditory cortex (AC) neurons projecting to primary visual cortex (V1) represent loud sound onsets, compared to the overall AC population. In V1, a small number of layer 1 interneurons gates this cross-modal information flow in a context-dependent manner. In dark conditions, loud sound onsets lead to suppression of the V1 population. However, when loud sound onsets coincide with a visual stimulus, visual responses are boosted in V1. Thus, a dynamic, asymmetric circuit connecting AC and V1 specifically identifies visual events that are coincident with loud sound onsets.

Cesare Parise (Oculus, Facebook)

"Detecting correlations across the senses"

Multisensory integration is a process of redundancy exploitation in which the brain combines redundant information from different sensory modalities to obtain more precise, accurate and faster perceptual estimates. To do so, the brain needs to constantly monitor the senses to detect which signals contain redundant (i.e., correlated) information. Correlation detection has been proposed as a general principle for sensory integration that can account for several perceptual phenomena, including horizontal sound localization, visual detection of motion and binocular disparity. Here I will provide evidence that the same principle can likewise account for a variety of aspects of multisensory integration, such as causal inference, synchrony and temporal order detection, Bayesian optimal integration and body ownership.
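As a very simplified, generic illustration of correlation detection, one can compute the correlation between two signals at a range of temporal lags: a strong peak suggests the signals are redundant, and its position estimates their relative timing. This is a plain lagged Pearson correlation, not the model presented in the talk; the function name `normalized_xcorr` and the simulated signals are hypothetical.

```python
import numpy as np


def normalized_xcorr(a: np.ndarray, b: np.ndarray, max_lag: int) -> dict:
    """Pearson correlation between signals a and b at each lag in [-max_lag, max_lag].

    A positive lag pairs a[i] with b[i + lag], i.e. it tests whether b lags behind a.
    """
    n = len(a)
    results = {}
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            x, y = a[: n - lag], b[lag:]
        else:
            x, y = a[-lag:], b[: n + lag]
        results[lag] = float(np.corrcoef(x, y)[0, 1])
    return results


# Hypothetical example: an "auditory" signal that copies a "visual" signal
# 5 samples later, plus independent noise (simulated, not experimental data).
rng = np.random.default_rng(0)
visual = rng.standard_normal(500)
auditory = np.roll(visual, 5) + 0.5 * rng.standard_normal(500)

xc = normalized_xcorr(visual, auditory, max_lag=20)
best_lag = max(xc, key=xc.get)
print("lag with highest correlation:", best_lag, "r =", round(xc[best_lag], 2))
```

Here the peak falls at the 5-sample delay built into the simulated signals; uncorrelated signals would produce no comparable peak at any lag.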

David Alais (School of Psychology, University of Sydney, Australia)

"A common mechanism processes auditory and visual motion"

We tested for common processing of auditory and visual motion using visual or auditory stimuli drifting left or right at various speeds. On each trial, observers judged speed by comparing the current speed to the average of all speeds (method of single stimuli), and mean perceived speed was calculated. We tested the relationship between consecutive trials in a sequential dependency analysis. Vision only: motion was perceived as faster after a fast preceding motion (and slower after a slow one). This is a positive serial dependency, or motion priming effect. Audition only: the same positive dependency occurred for auditory motion, showing a positive priming effect. In the key condition, visual and auditory motion alternated over trials. Whether vision preceded audition or audition preceded vision, positive serial dependencies were observed: audition primed visual motion and vision primed auditory motion. Based on the strong cross-modal priming and the similar magnitude of serial dependency in all conditions, we conclude that a common, supramodal mechanism processes motion regardless of whether the input is visual or auditory.
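A sequential dependency analysis of this kind of binary "faster/slower than average" judgment can be sketched by comparing the proportion of "faster" responses after fast trials with that after slow trials; a positive difference indicates positive serial dependence. This is a simplified sketch, not the analysis from the talk: the speed levels, the noise level and the pull-toward-previous-trial weight `k` of the simulated observer are all hypothetical.

```python
import numpy as np


def priming_effect(speeds, said_faster, reference):
    """Proportion of 'faster' responses after fast trials minus after slow trials.

    speeds: stimulus speed on each trial, in presentation order.
    said_faster: True where the observer judged the trial faster than `reference`.
    A positive value indicates positive serial dependence (priming).
    """
    speeds = np.asarray(speeds, dtype=float)
    said_faster = np.asarray(said_faster, dtype=bool)
    prev = speeds[:-1]          # stimulus on the preceding trial
    current = said_faster[1:]   # response on the current trial
    after_fast = current[prev > reference]
    after_slow = current[prev < reference]
    return float(after_fast.mean() - after_slow.mean())


def simulate_observer(n_trials, k, rng, levels=(4.0, 6.0, 8.0, 10.0, 12.0), noise_sd=2.0):
    """Hypothetical observer whose percept is pulled toward the previous speed by weight k."""
    speeds = rng.choice(levels, size=n_trials)
    reference = float(np.mean(levels))                  # average of all speeds
    prev = np.concatenate(([reference], speeds[:-1]))   # no history before trial 1
    perceived = speeds + k * (prev - reference) + rng.normal(0.0, noise_sd, n_trials)
    return speeds, perceived > reference, reference


rng = np.random.default_rng(1)
speeds, said_faster, ref = simulate_observer(20_000, k=0.3, rng=rng)
print("priming effect:", round(priming_effect(speeds, said_faster, ref), 3))
```

For a cross-modal condition, the same function would be applied to trial sequences in which the preceding trial is in the other modality.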

David Burr (Department of Neuroscience, University of Florence, Italy)

"Cross-sensory integration and calibration during development"

Much evidence suggests that humans integrate information between senses in a statistically optimal manner, maximizing the precision or reliability of performance. However, reliability-based integration matures only after about 8 years of age: in younger children, one sense dominates the other. One possibility is that the dominance of one or the other sense reflects cross-modal calibration of developing systems, where one sense calibrates the other rather than fusing with it to improve precision. Several clinical studies support this suggestion and show how impairment of one sense (such as in blindness) can affect other senses, such as touch and hearing.
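"Statistically optimal" integration is commonly formalized as reliability-weighted (maximum-likelihood) averaging, assuming each sense provides an unbiased estimate with independent Gaussian noise: each cue is weighted by its reliability 1/σ², and the combined variance is 1/(1/σ_a² + 1/σ_b²). The sketch below illustrates that standard formula; the function name and numbers are hypothetical.

```python
def combine_cues(est_a: float, sd_a: float, est_b: float, sd_b: float) -> tuple:
    """Reliability-weighted average of two independent Gaussian cue estimates.

    Each cue is weighted by its reliability (1 / variance); the combined
    standard deviation is never larger than that of the better single cue.
    """
    rel_a, rel_b = 1.0 / sd_a**2, 1.0 / sd_b**2
    w_a = rel_a / (rel_a + rel_b)
    combined = w_a * est_a + (1.0 - w_a) * est_b
    combined_sd = (1.0 / (rel_a + rel_b)) ** 0.5
    return combined, combined_sd


# Hypothetical numbers: a visual size estimate of 10 (SD 1) and a haptic
# estimate of 12 (SD 2), in arbitrary units.
size, sd = combine_cues(10.0, 1.0, 12.0, 2.0)
print(size, sd)
```

With these numbers vision receives a weight of 0.8, so the combined estimate (10.4) lies closer to the visual one, and the combined SD (about 0.89) is lower than either single-cue SD. The dominance of one sense reported in younger children would correspond to one weight staying close to 1 regardless of the cues' relative reliabilities.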

Huriye Atilgan (University College London, UK)

"A visionary approach to listening: the role of vision in auditory scene analysis"

To recognize and understand the auditory environment, the listener must first separate sounds that arise from different sources and capture each event. This process is known as auditory scene analysis. This talk discusses whether and how visual information can influence auditory scene analysis. First, I present our psychophysical findings that temporal coherence between auditory and visual stimuli is sufficient to promote auditory stream segregation in a sound mixture. Then, I focus on our electrophysiological studies that provide mechanistic insight into how auditory and visual information are bound together to form coherent perceptual objects that help listeners to segregate one sound from a mixture.

Jeff Yau (Baylor College of Medicine)

"Distributed and interactive cortical systems for audition and touch"

We rely on audition and touch to perceive and understand environmental oscillations. These senses exhibit highly specific interactions in temporal frequency processing. Although the extensive perceptual interactions between audition and touch have long been appreciated, how cortical processing in traditionally defined ‘unisensory’ brain regions supports these interactions remains unclear. In the first part of my talk, I will present evidence that a number of brain regions exhibit frequency-selective responses (indexed by fMRI adaptation) to both auditory and tactile stimulation. These multimodal responses are distributed over cortical systems that adhere to traditional hierarchical organization schemes. In the second part of my talk, I will present evidence that the functional coupling between the somatosensory and auditory perceptual systems is gated by modality- and feature-based attention. These collective results imply that auditory and tactile frequency representations are distributed over extensive cortical systems whose connectivity may be dynamically regulated by attention.

Laurence Harris (York University, Canada)

"Lying, moving and sounds affect tactile processing"

Both the perceived timing and the perceived intensity of a touch can be influenced by the context in which it occurs. Here I show that static vestibular stimulation (either natural or artificially created by electrical stimulation) affects the perceived timing of a touch, and that if a touch is applied to a moving limb, its perceived intensity is reduced (tactile gating). The simultaneous presentation of a sound can also reduce the perceived intensity of a touch, and these cross-modal effects seem to be additive. These findings add to the emerging understanding of the pervasive role of cross-modal effects in many aspects of cognitive processing.

Marc Ernst (Ulm University, Germany)

"Multisensory perception for action in newly sighted individuals"

Monica Gori (Istituto Italiano di Tecnologia, Genova, Italy)

"Spatial and temporal cross-sensory calibration in typical and impaired children and adults"

It is evident that the brain is capable of large-scale reorganization following sensory deprivation, but the extent of such reorganization is still unclear. Many studies show that the visual modality is crucial for developing spatial representations and the auditory modality is crucial for developing temporal representations. Blindness and deafness are ideal clinical conditions in which to study the reorganization of spatial and temporal representations when visual or auditory signals are not available. I will present our data on the development of cross-sensory spatial and temporal skills in typical, blind and deaf children and adults. Results show that blind and low-vision children and adults are impaired in some auditory and tactile spatial skills, and that deaf individuals are impaired in visual temporal processing. These results support the importance of these modalities for the cross-sensory development of spatial and temporal representations. I will also present EEG results in blind and deaf individuals that support this idea, showing that the sensory cortices have a pivotal role in building high-resolution, flexible spatial and temporal representations within the auditory and visual modalities, and that these mechanisms are experience-dependent. Finally, I will show that it is possible to improve spatial representation skills in blind individuals with specific rehabilitation training. Previous studies have shown that in sighted children the development of space representation is strictly related to the link between body movements and visual feedback. By 5 months of age, sighted infants start to watch movements of their own hands and reach out towards interesting objects. The onset of this successful sensory-motor association likely mediates the effects of visual experience on spatial representations in the sighted infant (Bremner et al., 2008).
When visual information is unavailable, the natural visual feedback associated with body movement, which is crucial for the development of space representation, is missing. We investigated whether a new sensory-motor training based on audio-tactile-motor feedback associated with body movement can be used to improve spatial representation in blind and low-vision children. Forty-two children between 3 and 15 years of age participated in 3 months of rehabilitation training with the new device. Results suggest that it is possible to use audio feedback associated with arm movement (e.g., small audio speakers positioned on the child's wrist) to rehabilitate space representation in blind children.

Salvador Soto-Faraco (ICREA & Center for Brain and Cognition, Universitat Pompeu Fabra, Barcelona, Spain)

"Multisensory Integration at the crossroads of Attention, Conflict and Awareness"

The benefits of perceiving and integrating information across different sensory systems have been extensively described in the past. For example, it is often easier to hear someone’s speech through the noise of a loud bar when we can see their face, and orienting to the blare of an ambulance siren often involves looking for flashing lights. These multisensory phenomena have been widely studied in the laboratory, but often under simplified conditions in which attention can easily be focused on the relevant stimuli. However, these focused-attention laboratory conditions are very different from everyday scenarios, where many relevant and irrelevant sensory events can co-occur within a short time window and perhaps at nearby locations in space. I will present the results of recent and past studies across various domains of perception that illustrate how endogenous factors, such as selective attention, conflict resolution, or consciousness, can strongly mediate the phenomenological and physiological expression of multisensory integration. Some of these findings suggest that fluctuations in ongoing neural activity may reflect the influence of these top-down processes. Altogether, I intend to conclude that multisensory integration in perception cannot be understood without its interplay with top-down processes, and that this interplay may lead to radically different experiences of otherwise similar sensory events.

Thomas Otto (University of St Andrews)

"Trimodal is faster than bimodal and race models can explain it all"

A prominent finding in multisensory research is that reaction times (RTs) are faster to bimodal signals than to their unimodal components, a phenomenon known as the redundant signals effect (RSE). An intriguing explanation of the effect comes from race models, which assume that responses to bimodal signals are triggered by the faster of two parallel perceptual decision processes. This basic model architecture results in statistical facilitation, and the RSE can be predicted from the RT distributions for unimodal signals using probability summation. A natural extension of the bimodal RSE, and a test of the framework's explanatory power, is that RTs to trimodal signals are even faster. To measure the effect, we presented three unimodal signals (in vision, audition, and touch), all bimodal combinations, and a trimodal condition. To adapt the model, a corresponding extension simply assumes that responses are triggered by the fastest of three parallel decision processes. Following probability summation, the expected RSE can then be computed for any combination of uni- and bimodal conditions, which yields a total of 7 parameter-free predictions that can be tested. Remarkably, the empirical effects follow these predictions very well overall. Race models are therefore a strong and consistent candidate framework to explain the RSE and provide a powerful tool to investigate and understand processing interactions with multimodal signals.
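Probability summation in a race model can be sketched as follows, assuming (as one common simplification) that the unimodal decision processes are stochastically independent. The predicted cumulative RT distribution for a redundant signal is then 1 - Π(1 - F_i(t)), where F_i is the empirical RT distribution for unimodal signal i. The RT samples are simulated and the function names are hypothetical; this is not the analysis code from the talk.

```python
import numpy as np


def ecdf(samples, t):
    """Empirical cumulative distribution: proportion of samples <= t."""
    samples = np.sort(np.asarray(samples, dtype=float))
    return np.searchsorted(samples, t, side="right") / samples.size


def race_prediction(unimodal_rts, t):
    """Race-model CDF P(min RT <= t), assuming independent channels: 1 - prod(1 - F_i(t))."""
    survival = np.ones_like(np.asarray(t, dtype=float))
    for rts in unimodal_rts:
        survival *= 1.0 - ecdf(rts, t)   # probability that this channel has not yet finished
    return 1.0 - survival


# Hypothetical unimodal RT samples in ms (simulated, not data from the talk).
rng = np.random.default_rng(0)
vision = rng.normal(300, 40, 5000)
audition = rng.normal(280, 40, 5000)
touch = rng.normal(290, 40, 5000)

t = np.array([250.0, 280.0, 310.0])
print("AV  prediction:", race_prediction([audition, vision], t))
print("AVT prediction:", race_prediction([audition, vision, touch], t))
```

Because each additional channel multiplies the survival term by a factor no greater than 1, the predicted trimodal CDF is never below the corresponding bimodal one, which is the statistical facilitation the abstract describes.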

Wendy Adams (University of Southampton)

"Understanding Material Properties from Vision and Touch"

Sensory signals from vision and touch are combined according to standard models of sensory integration, with the predicted benefits in reliability. This integration has been demonstrated across a variety of stimulus dimensions, including the geometric properties of size, shape, surface attitude and position. However, visual-haptic integration within the domain of material perception is less well understood. One complication is that visual and haptic signals for materials are often not strictly redundant. For example, very slippery surfaces tend to be glossy, but the correlation is not perfect. In addition, visual signals to material properties can be confounded by object geometry (e.g., surface curvature) and the prevailing illumination. I will discuss a collection of experiments that reveal the interactions between vision and touch for the estimation of shape, gloss, friction, compliance and roughness.