Four research projects funded by the ANR

Alex Cayco Gajic, Julie Grèzes, Léo Varnet and Christian Lorenzi have been awarded ANR funding for their research projects.

Alex Cayco Gajic is a junior professor at the Group for Neural Theory. She is interested in how different brain regions coordinate to achieve flexible motor control. She received ANR funding for the project "Explorer l'apprentissage moteur cérébello-cortical avec des réseaux de neurones récurrents" (Exploring cerebello-cortical motor learning with recurrent neural networks).

Accumulating evidence suggests that naturalistic behaviours are driven by interactions between brain regions traditionally studied in isolation. For example, recent work has shown that the cerebellum and the motor cortex, two highly interconnected regions that are each crucial for motor behaviour, coordinate during motor learning and execution. However, progress towards understanding cerebello-cortical interaction has been hindered by the drastically different circuitries of these regions, coupled with the inaccessibility of the deep subcortical pathways connecting them. As a result, it remains unclear how to integrate leading theories of cerebellar and cortical function in motor control.

Recent theoretical and experimental work suggests that the motor cortex flexibly generates the spatiotemporal patterns needed for complex movements by exploiting its rich dynamics. This idea has been strongly influenced by the rise of recurrent neural networks (RNNs), a class of AI-inspired models that can be trained to solve a wide range of motor and cognitive tasks through changes in their recurrent connectivity. In contrast, the feedforward, evolutionarily conserved circuitry of the cerebellum is thought to be optimized for learning sensorimotor relationships and for error-based adaptation of movements. Yet this classic view of cerebellar motor control cannot explain recent evidence of reward information in cerebellar Purkinje cells, nor the cerebellum's still mysterious role in cognition.

Alex Cayco proposes to use RNNs to probe how these two distinct neural circuits work together during motor learning. She will extend the traditional neocortex-like RNN architecture with a cerebellum-like module that captures the cerebellum's divergent-convergent structure and the sparser pathways linking the two regions. She will train this cerebello-cortical RNN (CC-RNN) on motor and cognitive tasks using machine learning methods, then use tools from systems neuroscience and statistical learning to analyze the structural and functional properties the network learns. With this approach, she will address four questions that have been difficult to answer with experiments or traditional circuit modeling: 1) how cerebellar and motor cortical representations co-emerge during learning, 2) how cerebellar adaptation reshapes motor representations in the neocortex, 3) the impact of cerebello-cortical scaling on complex behaviours, and 4) the role of cerebellar adaptation in cognition. More broadly, this project should lead to a fuller understanding of how cortical and subcortical regions interact to produce complex behaviours.

Julie Grèzes is a researcher at the Laboratoire de Neurosciences Cognitives et Computationnelles, where she co-directs the "Social Cognition: from Brain to Society" team. She has received funding for the EMOVITA project "How does emotion motivate action?".

Humans are able to respond to others' non-verbal signals, such as emotional expressions, in a very rapid and efficient way. Learning to adapt our behavior effectively to these socio-emotional signals is key to successful interactions and is a good predictor of mental health across the lifespan. Despite their importance, the mechanisms underlying our action decisions in response to emotional displays are still poorly understood. Automatic reactions to emotional stimuli are commonly believed to be the main constituents of emotional behavior. In social contexts, however, individuals may not simply react to emotional displays but use them to adapt their behavior flexibly. The project will investigate how goal-directed learning processes contribute to the emergence of adaptive decisions in response to socio-emotional signals, bringing together expertise from the philosophy of action, affective neuroscience and computational neuroscience.

The project "Exploration of phonetic representations and their adaptability by the method of Fast Auditory Classification Images (fast-ACI)", led by Léo Varnet of the Audition team at the Laboratoire des Systèmes Perceptifs, has also received funding.

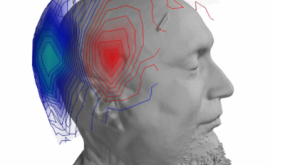

The fast-ACI project aims to develop a robust experimental method for visualizing and characterizing the auditory mechanisms involved in phoneme recognition. We will first use this technique to map the phonemic representations used by normal-hearing listeners, a long-standing problem in psycholinguistics. The fast-ACI method relies on a stimulus-response model, fitted using advanced machine learning techniques, to produce a snapshot of a participant's listening strategy in a given context. This makes it a powerful tool for exploring how speech comprehension adapts in the case of (1) sensorineural hearing loss and (2) noisy backgrounds. Particular attention will be paid to the harmful interaction between these two factors, an issue of prime importance when seeking to reduce the impact of hearing loss on everyday life. The project will ultimately result in a diagnostic tool that allows audiologists to measure their patients' listening strategies objectively and to design more individually tailored solutions.

Finally, Christian Lorenzi, a researcher in the Audition team of the Laboratoire des Systèmes Perceptifs, has received funding for the HEARBIODIV project "Auditory Perception of Natural Soundscapes: Hearing Biodiversity".

In everyday life, humans engage with various "soundscapes", that is, the acoustic patterns produced by biological, geophysical and anthropophonic sound sources. This program aims to assess, clarify and model the sensory mechanisms that allow humans to discriminate the auditory textures evoked by soundscapes recorded in nature reserves; in particular, we will assess to what extent, and how, humans discriminate textures associated with changes in biodiversity. The project will be carried out in three stages: (i) identification of texture statistics relevant to biodiversity assessment; (ii) selection and analysis of soundscape recordings from protected areas; (iii) psychophysical assessment of human texture-discrimination abilities, focusing on soundscapes that differ in species richness. Deviations between listeners' performance and that of automatic classifiers will help us probe the information processing and decision statistics that humans use to assess biodiversity.

MORE INFO

- Alex Cayco's website

- Julie Grèzes' website

- Léo Varnet's website

- Christian Lorenzi's website

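- (Re)read: Interview with Christian Lorenzi, "Caractérisation des signaux de modulation d'amplitude et de fréquence dans les paysages sonores naturels : une étude pilote sur quatre habitats d'une réserve de biosphère" (Characterization of amplitude- and frequency-modulation cues in natural soundscapes: a pilot study of four habitats in a biosphere reserve), DEC News, May 2020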

- Agence nationale de la recherche